One of the most popular use cases for NGINX Plus is as a content cache, both to accelerate local origin servers and to create edge servers for content delivery networks (CDNs). Caching can substantially reduce the load on your origin servers; how much depends on the cacheability of your content and the profile of user traffic.

NGINX Plus can cache content retrieved from upstream HTTP servers and responses returned by FastCGI, SCGI, and uwsgi services.

NGINX Plus extends the content caching capabilities of NGINX Open Source by adding support for cache purging and richer visualization of cache status on the live activity monitoring dashboard.

Why Use Content Caching?

Content caching improves the load times of web pages, reduces the load on your upstream servers, and improves availability by using cached content as a backup if your origin servers have failed:

- Improved site performance – NGINX Plus serves cached content of all types at the same speed as static content, meaning reduced latency and a more responsive website.

- Increased capacity – NGINX Plus offloads repetitive tasks from your origin servers, freeing up capacity to service more users and run more applications.

- Greater availability – NGINX Plus insulates your users from catastrophic errors by serving up cached content (even if it’s stale) when the origin servers are down.

NGINX Plus and NGINX provide a consolidated solution for your web infrastructure, bringing together an HTTP server for origin content, application gateways for FastCGI and other protocols, and an HTTP proxy for upstream servers. NGINX Plus adds enterprise‑grade application load balancing, consolidating the front‑end load balancers in your web infrastructure.

In Detail – Content Caching with NGINX Plus

Cached content is stored in a persistent cache on disk, and served by NGINX Plus and NGINX in exactly the same manner as origin content.

To enable content caching, include the proxy_cache_path and proxy_cache directives in the configuration:

    # Define a content cache location on disk
    proxy_cache_path /tmp/cache keys_zone=mycache:10m inactive=60m;

    server {
        listen 80;
        server_name localhost;

        location / {
            proxy_pass http://localhost:8080;

            # Reference the cache in a location that uses proxy_pass
            proxy_cache mycache;
        }
    }
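The introduction notes that responses from FastCGI services can be cached as well. A minimal sketch of the equivalent setup follows; the cache path, the zone name `fcgicache`, the backend address `127.0.0.1:9000`, and the cache key are assumptions chosen for illustration.

```nginx
# Define a separate cache for FastCGI responses (hypothetical path and zone)
fastcgi_cache_path /tmp/fcgi_cache keys_zone=fcgicache:10m inactive=60m;

server {
    listen 80;
    server_name localhost;

    location ~ \.php$ {
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

        # Hypothetical FastCGI backend
        fastcgi_pass 127.0.0.1:9000;

        # Reference the cache; unlike proxy_cache_key, fastcgi_cache_key
        # has no default and must be set explicitly
        fastcgi_cache fcgicache;
        fastcgi_cache_key "$scheme$request_method$host$request_uri";
    }
}
```

The SCGI and uwsgi modules provide directives of the same shape under the `scgi_` and `uwsgi_` prefixes.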

By default, NGINX Plus and NGINX take a safe and cautious approach to content caching. They cache only responses to GET and HEAD requests, and only when the response does not include a Set-Cookie header. Cache time is defined by the origin server's response headers (X-Accel-Expires, Cache-Control, and Expires). NGINX Plus honors the Cache-Control extensions defined in RFC 5861, stale-while-revalidate and stale-if-error.

Each of these behaviors can be extended and fine‑tuned using a range of directives. For a comprehensive introduction, see the NGINX Plus Admin Guide.
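As a minimal sketch of such tuning, the fragment below extends the earlier example with a few standard caching directives. The cache times, the `nocache` cookie and query-argument names, and the `X-Cache-Status` header name are illustrative assumptions, not recommendations.

```nginx
proxy_cache_path /tmp/cache keys_zone=mycache:10m inactive=60m;

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://localhost:8080;
        proxy_cache mycache;

        # Fallback cache times, used when the origin sends no
        # X-Accel-Expires, Cache-Control, or Expires header
        proxy_cache_valid 200 302 10m;
        proxy_cache_valid 404 1m;

        # Cache a response only after it has been requested twice
        proxy_cache_min_uses 2;

        # Skip the cache lookup when a "nocache" cookie or query argument
        # is set to a non-empty value other than 0
        proxy_cache_bypass $cookie_nocache $arg_nocache;

        # Expose the cache status (HIT, MISS, EXPIRED, ...) to clients
        add_header X-Cache-Status $upstream_cache_status;
    }
}
```

The added response header makes it easy to check from a client whether a given request was answered from the cache.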

Instrumenting the Cache

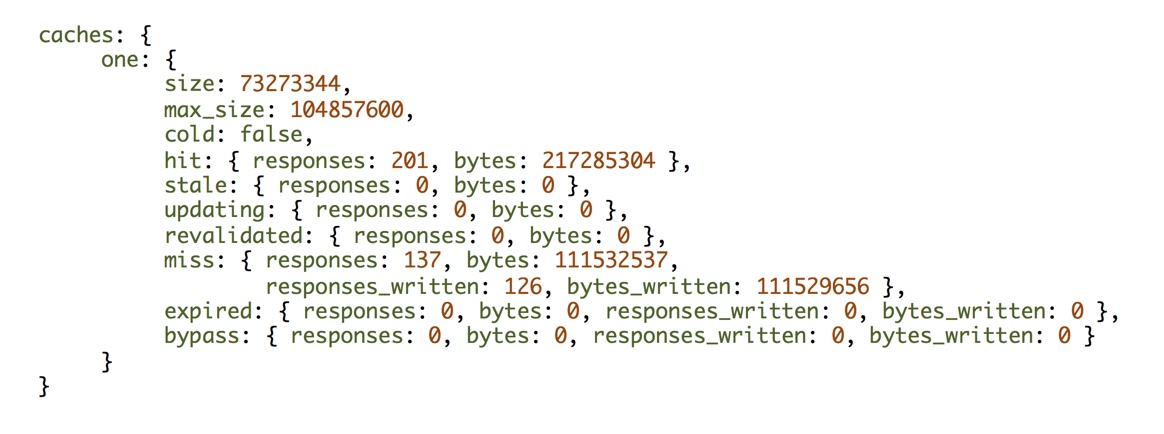

NGINX Plus’ live activity monitoring API reports a range of statistics you can use to measure the utilization and effectiveness of your content caches.

The JSON data includes full information on the cache activity.
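One way to expose these statistics is the NGINX Plus API module, sketched below. The listening port, the `/api` location name, and the access rules are assumptions for illustration, and the `api` directive is available in NGINX Plus only.

```nginx
server {
    # Hypothetical management port, kept separate from client traffic
    listen 8080;

    location /api {
        # Enable the NGINX Plus REST API in read-only mode
        api;

        # Restrict access to the local host
        allow 127.0.0.1;
        deny all;
    }
}
```

With this in place, per-cache statistics should be available under the API's `http/caches` endpoint, below a version prefix that depends on the NGINX Plus release.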

Managing Stale Content

By default, NGINX Plus and NGINX serve cached content for as long as it is valid. Validity is configurable or can be controlled by the Cache-Control header set by the origin server. After the validity period, cached content is considered stale and must be revalidated by checking that the cached content is still the same as the content found on the origin server.

Stale content might never be requested again, so NGINX Plus and NGINX revalidate stale content only when a client requests it. Revalidation can be performed in the background: the stale content is served immediately, so the client request is neither interrupted nor delayed. Stale content is also served when the origin server is unavailable, providing high availability at times of peak load or during long outages of the origin server.

The conditions under which NGINX and NGINX Plus serve stale content can be configured with directives, or by honoring the stale-while-revalidate and stale-if-error extensions found in the origin server’s Cache-Control header.
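A minimal sketch of the directive-based approach follows, assuming the `mycache` zone and upstream address from the earlier example. The fragment belongs inside a `server` block, and the particular set of error conditions is an illustrative choice.

```nginx
location / {
    proxy_pass http://localhost:8080;
    proxy_cache mycache;

    # Serve stale content while an entry is being refreshed, or when
    # the origin server errors out, times out, or returns a 5xx status
    proxy_cache_use_stale error timeout updating
                          http_500 http_502 http_503 http_504;

    # Refresh an expired entry with a background subrequest while the
    # stale copy is returned to the client
    proxy_cache_background_update on;

    # Revalidate with conditional requests (If-Modified-Since,
    # If-None-Match) instead of refetching the full response
    proxy_cache_revalidate on;
}
```

Background updates rely on the `updating` parameter of `proxy_cache_use_stale`, since the stale copy must be allowed to be served while the refresh is in progress.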

Purging Content from the Cache

One of the side effects of content caching is that content updates on the origin server do not necessarily propagate immediately to the cache, meaning that clients might continue to be served the old content for a period of time. If an update operation changes a number of resources at the same time (for example, changes a CSS file and referenced images), it’s possible for a client to be served a mixture of stale and current resources, making for an inconsistent presentation.

With NGINX Plus’ cache‑purging feature, you can address this issue easily. The proxy_cache_purge directive enables you to immediately remove entries from NGINX Plus’ content cache that match a configured value. This method is most easily triggered by a request that contains a custom HTTP header or method.

For example, the following configuration identifies requests that use the PURGE HTTP method, and deletes matching URLs:

    proxy_cache_path /tmp/cache keys_zone=mycache:10m levels=1:2 inactive=60s;

    map $request_method $purge_method {
        PURGE   1;
        default 0;
    }

    server {
        listen 80;
        server_name www.example.com;

        location / {
            proxy_pass http://localhost:8002;
            proxy_cache mycache;
            proxy_cache_purge $purge_method;
        }
    }

You can issue purge requests using a range of tools, such as the curl command in the following example:

    $ curl -X PURGE -D - "http://www.example.com/*"
    HTTP/1.1 204 No Content
    Server: nginx/1.5.12
    Date: Sat, 03 May 2014 16:33:04 GMT
    Connection: keep-alive

As shown in the example, you can purge an entire set of resources that have a common URL stem, by appending the asterisk (*) wildcard to the URL.
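Because a purge request can empty large parts of the cache, it is usually worth limiting who may send one. The sketch below, meant for the `http` context, combines the `geo` and `map` modules for that purpose; the listed client addresses are hypothetical, and the `purger=on` parameter is an optional addition that is not part of the configuration above.

```nginx
# purger=on starts a process that removes entries matching a
# wildcard key from disk
proxy_cache_path /tmp/cache keys_zone=mycache:10m levels=1:2 inactive=60s purger=on;

# Hypothetical list of client addresses allowed to purge
geo $purge_allowed {
    default         0;
    127.0.0.1       1;
    192.168.0.0/24  1;
}

# PURGE requests take effect only for allowed clients
map $request_method $purge_method {
    PURGE   $purge_allowed;
    default 0;
}
```

The `server` block stays as shown earlier: `proxy_cache_purge $purge_method;` now evaluates to 1 only for PURGE requests from an allowed address.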

More Information

NGINX Plus inherits all of the caching capabilities of NGINX. For details, see the NGINX Plus Admin Guide and the reference documentation.