The Commodification of Operations

In the days of yore, transmissions in cars were manual. Some might have referred to them as a "stick" thanks to the mechanism by which you shifted gears. In those days, an automatic transmission was something special that you often had to order. Its name comes from the way in which the car automatically shifted gears for you. Which, to be honest, is kind of nice. After all, there are a lot of variables to manage when you're trying to shift gears manually.

Today, automatic transmissions are the standard. Manual is a mystery to most. I tried to teach each of my three oldest children how to drive one. I do not recommend making the attempt, if you're considering it. Not if you like a working transmission in your car.

I mention this shift in expectations and standards as a prelude to a discussion on operations. Mostly that of the network and application service infrastructure, but it holds true outside those demesnes as well.

Operational Expectations

The shift is toward automation, yes, but it's also a shift in expectations with respect to the knowledge required to operate infrastructure.

To go back to my transmission analogy, if you're driving a manual transmission you have a lot of variables to manage. You need to coordinate the clutch and the gas. You need to listen to the engine and recognize when to shift gears. You also have to know what gear you're in, and what gear you're going to, and how to move the "stick" to get there.

These mirror the kinds of knowledge you need to manually deploy network and application service infrastructure. You need to know a lot about how the network operates in order to ensure traffic gets from one place to the other.

The adoption of cloud began the transition of expectations away from this 'standard'. While you still have to understand some basic networking concepts, you don't necessarily have to understand how they work. With the introduction of containers, the expectation has moved even further to the right, with little or no requirement to even think about IP addresses.

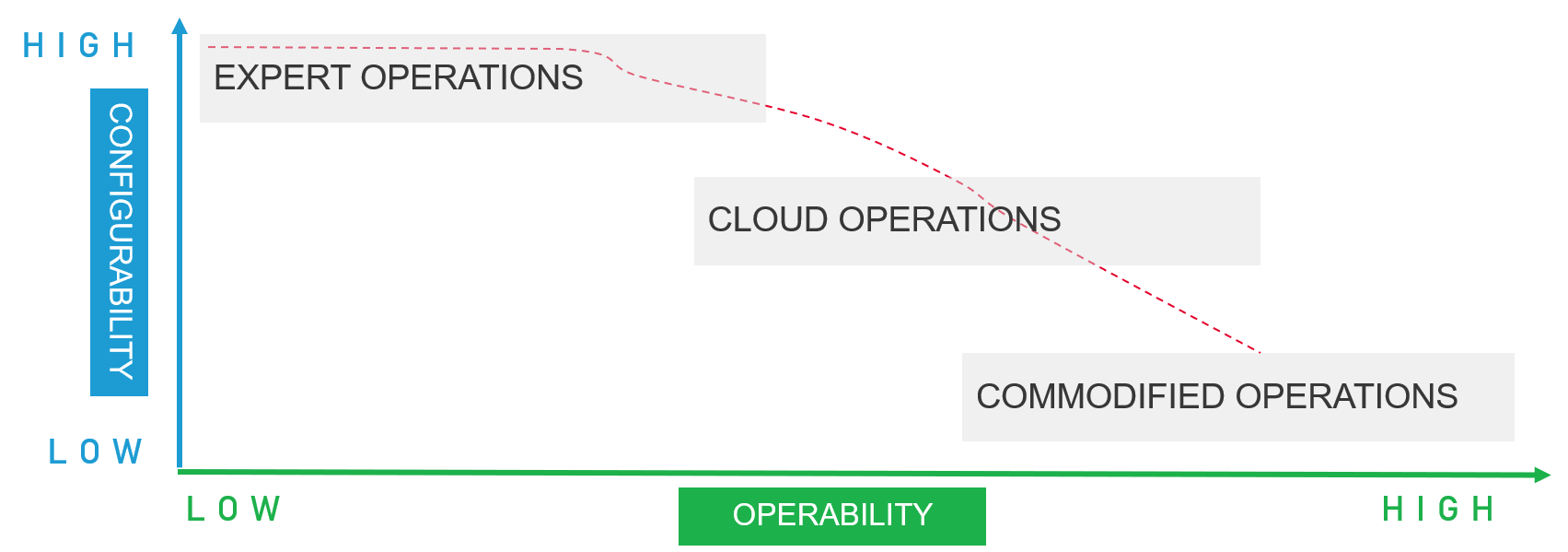

What this does is shift operational expectations. We're moving from an expert operations economy to a commodified operations economy. Today, the operational aspects of deploying network and application service infrastructure are expected to be accessible to a broader set of roles within the organization. To get there, simplification is necessary.

To be specific, operational simplification. It isn't enough to deliver network and application services via self-service options; those options have to be usable by those who will, well, make use of them. They have to be even simpler than they are now.

That can mean sacrificing configurability for operability.

Configurability versus Operability

As with cloud and containers, the abstractions laid atop network and application service infrastructure extend to the use of those abstractions. By that I mean the vernacular. The terminology. The data model. The actual configuration.

What I mean by that, for those non-programmer types reading, is that each construct has a bunch of attributes associated with it that make up the "object". A Virtual Server object has an IP address, a Pool of applications, events, a name, and a whole bunch of other characteristics. Some of those characteristics are actually objects, or lists of objects. Traversing these constructs can be complex, and that complexity exists because configurability is imperative when fine-tuning infrastructure. You want the ability to tweak very specific characteristics, like toggling Nagle's algorithm or adjusting the TCP window size, to optimize for performance or capacity.
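To make the "object" idea concrete, here is a minimal sketch of what such a nested model might look like. Every class and field name below (`VirtualServer`, `Pool`, `TcpProfile`, `nagle_enabled`, and so on) is hypothetical and chosen for illustration; it is not the data model of any particular product.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TcpProfile:
    # Hypothetical tuning knobs of the kind an expert operator adjusts.
    nagle_enabled: bool = True
    receive_window_bytes: int = 65535


@dataclass
class PoolMember:
    address: str
    port: int


@dataclass
class Pool:
    name: str
    members: List[PoolMember] = field(default_factory=list)


@dataclass
class VirtualServer:
    name: str
    ip_address: str
    pool: Pool                      # an object nested inside the object
    tcp_profile: TcpProfile = field(default_factory=TcpProfile)
    events: List[str] = field(default_factory=list)


vs = VirtualServer(
    name="storefront",
    ip_address="203.0.113.10",
    pool=Pool(
        "storefront-pool",
        [PoolMember("10.0.0.11", 8080), PoolMember("10.0.0.12", 8080)],
    ),
)

# Fine tuning means knowing where in the hierarchy each knob lives.
vs.tcp_profile.nagle_enabled = False
vs.tcp_profile.receive_window_bytes = 131072

# Even a simple question ("which instances serve this app?") is a traversal.
member_addresses = [member.address for member in vs.pool.members]
```

Nothing here is difficult for a programmer, but each dotted path is one more thing an operator has to know before touching the configuration.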

Now, in a commodified operations model - which is where we're heading - operability is more important than configurability. Fewer options, faster uptime.

But this does not mean simply taking away options. Eliminating the ability to tweak options meets only the basic requirements for operability; it doesn't meet the expectations of a simplified operational experience, because you still need to understand the object model. What's necessary is to abstract the model into something simpler. Say, reduce a Virtual Server to an IP address, a name, and a list of application instances.
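As a rough sketch of that reduction, assume a simplified Virtual Server that carries only a name, an IP address, and a list of instances, plus a helper that expands it into a fuller configuration using defaults chosen by whoever owns the platform. The names `SimpleVirtualServer`, `PLATFORM_DEFAULTS`, and `expand` are invented for this example, as are the default values.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class SimpleVirtualServer:
    # The only three things the operator is asked to know.
    name: str
    ip_address: str
    instances: List[str]  # each entry is "host:port"


# Hypothetical defaults, set once by the experts instead of per deployment.
PLATFORM_DEFAULTS = {"nagle_enabled": True, "receive_window_bytes": 65535}


def expand(simple: SimpleVirtualServer) -> Dict[str, Any]:
    """Turn the simplified object into a fuller, nested configuration."""
    members = []
    for instance in simple.instances:
        host, port = instance.rsplit(":", 1)
        members.append({"address": host, "port": int(port)})
    return {
        "name": simple.name,
        "ip_address": simple.ip_address,
        "pool": {"name": f"{simple.name}-pool", "members": members},
        "tcp_profile": dict(PLATFORM_DEFAULTS),
    }


full_config = expand(
    SimpleVirtualServer("storefront", "203.0.113.10", ["10.0.0.11:8080"])
)
```

The options have not disappeared in this sketch. They have moved behind the abstraction, which is the difference between removing configurability and simplifying the model.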

Commodification Requires Standardization

This is a significant undertaking because there is no common model upon which application service infrastructure operates. The way in which virtual servers, ingress control, and security policies are represented varies from product to product, from service to service.

Ops - whether on the development side or the IT side - is effectively required to understand a plethora of models in order to deploy and operate the fourteen application services used, on average, to deliver and secure apps. There is a lot of variation, which in the past has led to the myriad certifications required to verify the expertise needed to manage these services.

The other operational shift is away from this approach. It is an expectation of simplicity, of ease-of-use and commonality across offerings as a way to reduce the technical debt incurred by device-specific operating models. It's an expectation of standardization: not of protocols and network behavior, where standards already exist in the network infrastructure space, but of how we represent those protocols and network behaviors.
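One way to picture standardizing the representation rather than the behavior: the same service described once, then translated into two different product-specific shapes. Both "products" and all of their field names below are made up for illustration; real products differ in their own ways.

```python
from typing import Any, Dict

# One common description of the service.
common_service = {
    "name": "storefront",
    "ip_address": "203.0.113.10",
    "instances": ["10.0.0.11:8080", "10.0.0.12:8080"],
}


def to_product_a(svc: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical product A calls it a "virtual" with a "destination".
    return {
        "virtual": {
            "label": svc["name"],
            "destination": svc["ip_address"],
            "pool_members": list(svc["instances"]),
        }
    }


def to_product_b(svc: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical product B calls it a "listener" with "backends".
    return {
        "id": svc["name"],
        "listener": svc["ip_address"],
        "backends": [{"endpoint": inst} for inst in svc["instances"]],
    }


config_a = to_product_a(common_service)
config_b = to_product_b(common_service)
```

The network behavior is identical in both cases. Only the vocabulary changes, and that vocabulary is exactly what an operator otherwise has to learn product by product.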

We see this playing out in surveys on containerization, in which necessary skills are cited as a major inhibitor to adoption by nearly one in four (24%) respondents along with 33% who said it was a moderate inhibitor. Infrastructure - in which containers and container orchestration systems are certainly included given their prevalence in production environments today - is being driven by a lack of skills and desire for speed toward commodified operations.

That desire and need is seen in the organic adoption of Kubernetes resource files that attempt to describe said infrastructure services. These resources force upon all ops the use of a common (commodified) data model (format) for describing the deployment and configuration of a given service. Given that IT operations is the number one driver of container adoption (35%) in organizations today (according to Diamanti's 2019 Container Adoption Survey) - more than twice as influential as developers (16%) and nearly four times as influential as integrated DevOps teams (9%) - it is important to recognize this shift and consider carefully how new infrastructure fits (or doesn't fit) into a commodified operations environment.
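For readers who have not looked at a Kubernetes resource file, the sketch below builds two of them as plain Python dictionaries: a Service and an Ingress. The field names follow the Kubernetes `v1` Service and `networking.k8s.io/v1` Ingress schemas; the application name, host, and ports are placeholders invented for the example. Kubernetes accepts manifests as JSON as well as YAML, so the standard library is enough to serialize them.

```python
import json

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "storefront"},
    "spec": {
        "selector": {"app": "storefront"},
        "ports": [{"protocol": "TCP", "port": 80, "targetPort": 8080}],
    },
}

ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "storefront"},
    "spec": {
        "rules": [{
            "host": "shop.example.com",
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {
                    "service": {"name": "storefront", "port": {"number": 80}}
                },
            }]},
        }],
    },
}

# Both resource kinds shown here use the same four top-level keys.
ENVELOPE = ("apiVersion", "kind", "metadata", "spec")


def shares_envelope(resource: dict) -> bool:
    """Return True if the resource has every key in the common envelope."""
    return all(key in resource for key in ENVELOPE)


manifests = [json.dumps(resource, indent=2) for resource in (service, ingress)]
```

Two very different services, load distribution and ingress routing, are described with the same outer shape and the same vocabulary for names and ports. Notice also what is absent: no IP addresses, no TCP tuning, nothing that requires knowing how the underlying network behaves.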

With or without official (workgroup or foundation) efforts, commodification will drive a de facto standard into operations. And that de facto standard will be one that emphasizes operability over configurability.